Talk:Time Support: Difference between revisions

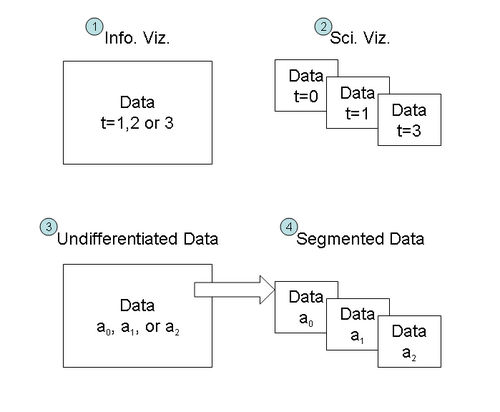

Figure 1: Diagram of conceptual groupings of data.

- The idea of attribute-based separation is certainly an intriguing concept. I'm not sure we need to spend too much time on that, as one could easily implement a filter to perform the 3 to 4 operation. It's basically just a threshold operation. The only interesting part here is that this filter would have its threshold parameters set by information keys passed up from the pipeline executor.

- In my mind, there is only one sticky point. In order for this to make sense, there need to be information keys to set not just time but any attribute, and the grouped data structure must handle not just time and parts, but also grouping on any attribute. Since we don't have a use case, it does not make much sense to me to implement the generalization at this moment if it complicates the interface (which it undoubtedly would).

- However, the whole concept gets me thinking (a dangerous notion). If we think about it, this mechanism makes sense for the ivars of a filter. For example, consider the isosurface filter. Consider the space of all possible outputs of the filter. This space has the three spatial dimensions, a dimension for each attribute, and a new dimension representing all possible isosurface values (actually, the current implementation would be the power set of all isosurface values). So, we can think about the isosurface filter as performing the 3 to 4 operation that groups outputs based on the isosurface value(s). So what if the mechanism for setting the isosurface values was the same as setting the time value? That might have interesting ramifications on animation controls.

- OK. So conceptually that makes sense, but practically it's nonsense. First of all, to actually implement this would require changing all filters to grab all their ivar values from keys the executor passes them. The task is so large as to be impractical. Also, what happens when two filters have an ivar of the same name? For example, what if there were two isosurface filters each operating on a different field? There would have to be a mechanism to select the filter, but then how would that be any different from just setting the ivar directly on the filter itself?

- So coming back full circle, maybe we already have an implementation for the 3 to 4 operation. It's the threshold filter. Granted, it would be nicer if we could select multiple ranges, but I believe Dave R. has already been working on something like that for Prism. --Ken 08:09, 28 Jul 2006 (EDT)

Sci. Viz. Without Regard to Time

We're already thinking about InfoViz as 'disregarding time' (considering it just one of many attributes of data). If we extend this thinking, there are many possibilities in a time-independent SciViz world. For example:

- At a particular moment in time, we'd like to highlight all cells which, at some point in the future, will become degenerate. Or which, at some point in the simulation, have TEMPERATURE within a certain range. This would aid in understanding the development of the simulation.

- For a simulation of an airplane landing, I'd like to see the turbulence flow over the whole aircraft for the entire length of the simulation. This would afford me a view of the entire simulation in one view - showing a 'path through time'.

- Taking an isosurface of a value over all timesteps would show me an envelope of that value.

- There are many examples of things you could learn from seeing a Muybridge-like multiple exposure picture of things over time. The best examples of this would perhaps be things that move, like a bouncing ball.

- Material tracking. Given a piece of material that ended up at a certain place, what was its evolution over time?

Of course, these techniques will only be useful with certain types of data, and on certain runs of data. Still, it is interesting to think about the functional overlap between SciViz and InfoViz - when we are able to combine arbitrary query-based questions with discrete representations of data, what things will we be able to study that we cannot now?
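To make the first bullet above concrete, here is a minimal sketch of "which cells ever have TEMPERATURE within a certain range". It assumes the mesh topology does not change over time, so cell i means the same cell at every timestep, and it uses plain Python lists rather than any VTK data structure. The function name `cells_ever_in_range` is hypothetical.

```python
def cells_ever_in_range(values_by_step, low, high):
    """Return the ids of cells whose value falls in [low, high] at any timestep.

    values_by_step is a list with one entry per timestep; each entry is a
    list holding one scalar per cell (assumed: same cell ordering every step).
    """
    if not values_by_step:
        return []
    flagged = [False] * len(values_by_step[0])
    for step_values in values_by_step:
        for cell_id, value in enumerate(step_values):
            if low <= value <= high:
                flagged[cell_id] = True
    return [cell_id for cell_id, hit in enumerate(flagged) if hit]


# Three cells over three timesteps (made-up numbers for illustration).
temperature = [
    [10.0, 50.0, 90.0],
    [20.0, 55.0, 80.0],
    [45.0, 60.0, 70.0],
]
hot_at_some_point = cells_ever_in_range(temperature, 40.0, 60.0)
```

The result could then drive highlighting at whatever single moment in time is being rendered, which is the time-independent query the bullet describes.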

Latest revision as of 07:09, 28 July 2006

OK, here is my approach to this.

First, finalize buy-in on the basic approach. There are two main approaches. The first is that a request for multiple time steps is handled by returning a multiblock dataset, one block for each time step. The second approach is to only fulfill requests for one time step at a time. The first approach would require changes to the multiblock executive. It would tend to use a larger memory footprint (many timesteps in memory at once). The second approach is simpler in that it can work with minor changes to the current pipeline. My suggestion is to go with the second approach.

- I have two problems with the second approach: user interactivity and developer ease-of-use. The new executive in VTK 5 is slower than the old one, and running the executive once for each frame in an interactive session (15+ fps) doesn't seem terribly feasible. Also, I suspect that many filters that operate on temporal data will end up creating a multiblock dataset or some equivalent in order to hold the datasets they require. That's just asking for lots of code replication and tedious programming.

- Well, I hear you :) The ideal fix is to go to a true 4D representation and extent. But that would be an enormous effort. But maybe that is the direction we should head. I don't think the multiblock approach buys us much, as the pipeline will just iterate over the blocks all the same. For example, requesting one cell for 100 time steps will result in a 100-block dataset (a block is really a dataset as well), and for almost all filters the pipeline will iterate over them 100 times, once for each block. That is the same basic situation as not using multiblock, just depth first (100 blocks on filter 1, then 100 blocks on filter 2) versus breadth first. Maybe there is some other approach that makes more sense. --Martink 13:11, 25 Jul 2006 (EDT)

- I agree that both the single-timestep and multi-timestep pipelines have to retrieve all of the data requested in the end. My point is that on many occasions, values for multiple timesteps will be required simultaneously for processing. For example: temporal plots, temporal interpolation, temporal derivative estimation, and streakline generation. To me, that means the programming model should make multiple time steps available. I am not trying to be choosy about whether that's done at the pipeline level.

- As for 4-D grids, I am not convinced that a unique (or even canonical) 4D representation and extent exist, which only makes the effort look more enormous. Specifically, with all the possible time interpolation techniques (explicit/implicit, fixed/adaptive, ...) as well as all the different mesh types (h-adaptive, hp-adaptive, ALE, XFEM) -- some of which change mesh topology as time progresses -- I don't know that a 4-D representation in the sense of a vtk4dUnstructuredGrid mesh class that performed its own temporal interpolation is feasible.

- If you want a pipeline that performs 1 timestep request at a time, how about a "3+1/2"-D mesh representation that could hold a mesh at several instants in time, could provide spatial and temporal *derivative* information (as supplied by a reader and/or interpolation filter via pipeline requests), and could perform a lot of the tedious pipeline interactions/caching to update itself to a new point in time? With a 3.5-D mesh class like that, the pipeline might not fulfill requests for extents in time, but the mesh would make it appear to do so. Changes in mesh topology with time would be possible since it would simply be a container for multiple datasets. --Dcthomp 15:33, 25 Jul 2006 (EDT)

- If you're worried about caching problems were the pipeline to accept 4-D extents, why not introduce a "context" to the pipeline? There could be a default context, and any filter that was going to ask for many timesteps with small spatial extents could request and enable a new context. That wouldn't require lots of changes to existing filters (they get the default context) and would provide a way for new filters (e.g., ones generating temporal line/arc plots) to request the data they require in one fell swoop.

- I'd like to see the information keys used to denote time changed a little bit. Sources should be able to advertise whether or not they are temporally continuous or discretized. Sinks will always be required to request discrete times.

- Continuous sources would be nice. Perhaps add the notion of a source being able to specify a range of time that it supports (e.g. from T1 to T2). That leads to changing the time request to be a double as opposed to an index, which would work, though it might require an epsilon to be safe. --Martink 13:11, 25 Jul 2006 (EDT)

- Yes, I like the idea of continuous time sources for things like camera paths... they shouldn't constrain which times are chosen. As far as epsilon comparisons, I don't think they would be required if you implement temporal interpolation as a separate filter. Discrete sources (readers, etc.) would simply return data with the closest time before the requested time. The dataset timestamp (pipeline time, not simulation time) would indicate whether or not the data was identical to some other request. Or am I missing something?--Dcthomp 15:33, 25 Jul 2006 (EDT)

- Finally, regarding interactivity... I worry that disk I/O will be a significant barrier to truly interactive framerates regardless of how fast the executive is at processing requests. It's quite possible that some combination of pre-fetching, adaptive/progressive rendering, and a new file format (along the lines of Valerio Pascucci's cache-oblivious format) will be required. And that sounds like it's beyond the project scope for the moment, but I thought I would share my angst anyway. --Dcthomp 19:35, 24 Jul 2006 (EDT)
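As an illustration of the suggestion in the thread above that discrete sources "simply return data with the closest time before the requested time", here is a minimal sketch of that snapping rule. It assumes the source advertises a sorted list of times; the function name `snap_to_previous_step` and the choice to clamp early requests to the first step are assumptions, not something decided in this discussion.

```python
from bisect import bisect_right


def snap_to_previous_step(time_steps, requested_time):
    """Return the index of the latest stored step at or before requested_time.

    time_steps must be sorted in increasing order.
    """
    if not time_steps:
        raise ValueError("source advertises no time steps")
    # bisect_right counts how many stored times are <= requested_time.
    index = bisect_right(time_steps, requested_time) - 1
    # Assumption: a request earlier than the first step clamps to the first step.
    return max(index, 0)


steps = [0.0, 0.5, 1.0, 2.0]
index_for_request = snap_to_previous_step(steps, 0.75)
```

No epsilon appears here: two requests that snap to the same index yield the same data, which is the point made above about leaving interpolation to a separate filter.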

To that end the following are tasks to be completed:

Add in time step reporting and querying using the pipeline mechanisms to the critical readers as defined by Sandia if they currently lack that support. It looks right now that the key readers do report available time steps using TIME_STEPS and it appears they also honor UPDATE_TIME_INDEX (mostly there)

Add in a method in Algorithm to specify the UpdateTimeIndex so that time can be pulled from the end of a pipeline. (easy)

Create a time interpolating filter. You specify the start time, end time, number of timesteps. The filter will retrieve input data as needed to produce the interpolated output data. Additionally it would have time shift and scale ivars so that two time varying datasets could be aligned even though their time units may have different origins or scales. (moderate)

Add an ability to request a single cell. This will be a third form of update extent. This will be useful for getting one cell across many time steps. (could be nasty, lots of interactions with the pipeline and existing readers and extent types)

If needed, provide a pipeline hint that many time step requests will be coming. Basically a hint that readers can use to preload the data once. (easy to add the hint, have to have smart readers reference it)

Could add a time data cache, that caches the last N time/data requests. (fairly easy)
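One possible reading of "caches the last N time/data requests" is a small least-recently-used cache keyed by time. The sketch below is only that reading, written with the Python standard library; the class name `TimeDataCache` and the `load` callback (standing in for whatever actually reads or computes a timestep) are hypothetical.

```python
from collections import OrderedDict


class TimeDataCache:
    """Keep the data for the N most recently requested times."""

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._entries = OrderedDict()  # time -> data, oldest request first

    def get(self, time, load):
        """Return cached data for time, calling load(time) only on a miss."""
        if time in self._entries:
            # Mark this time as the most recently requested.
            self._entries.move_to_end(time)
            return self._entries[time]
        data = load(time)
        self._entries[time] = data
        if len(self._entries) > self._capacity:
            # Drop the least recently requested time.
            self._entries.popitem(last=False)
        return data
```

Keying on the exact double means two requests must carry identical time values to hit the cache; whether near-equal times should also match is the epsilon question raised elsewhere on this page.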

Updated Plan and Issues

OK here is my updated approach to this (in order):

Go with a multiblock time approach incorporated into the current multiblock executive.

- Notes from the tcon: basic agreement from folks on this. A clarification that time is the outer structure, so you can have many timesteps, each one with a multiblock dataset. --Martink 16:27, 26 Jul 2006 (EDT)

Add information keys for specifying a continuous time range T1 to T2 as doubles.

Change the basic time request key to be a vector of double times (UPDATE_TIMES). Match within epsilon, or exact, to be the same request?

- Make sure the discrete sources also set the T1 T2 range info. --Martink 16:27, 26 Jul 2006 (EDT)
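The open question above, whether two UPDATE_TIMES requests count as the same when they match exactly or only within an epsilon, can be sketched as follows. This is an illustration in plain Python, not the pipeline's implementation; the function name `same_time_request` and the default tolerance are assumptions.

```python
import math


def same_time_request(times_a, times_b, epsilon=1e-9):
    """Return True if two vectors of requested times match element by element.

    With epsilon=0.0 this is an exact comparison; otherwise each pair of
    times may differ by at most epsilon (an absolute tolerance).
    """
    if len(times_a) != len(times_b):
        return False
    return all(
        math.isclose(a, b, rel_tol=0.0, abs_tol=epsilon)
        for a, b in zip(times_a, times_b)
    )


# 0.1 + 0.2 is not exactly 0.3 in floating point, so the two policies differ.
within_epsilon = same_time_request([0.1 + 0.2], [0.3])
exact = same_time_request([0.1 + 0.2], [0.3], epsilon=0.0)
```

An absolute tolerance is used here for simplicity; a tolerance relative to the advertised T1 to T2 range would be another reasonable choice.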

Add in and update time step reporting and querying using the pipeline mechanisms to the critical readers as defined by Sandia if they currently lack that support. It looks right now that the key readers do report available time steps using TIME_STEPS. Make them honor UPDATE_TIMES.

Add in a method in Algorithm to specify the UpdateTimes so that time can be pulled from the end of a pipeline. (easy)

Create a time interpolating filter. The filter will retrieve input data as needed to produce the interpolated output data. Additionally it would have time shift and scale ivars so that two time varying datasets could be aligned even though their time units may have different origins or scales. (moderate)

- While only useful for some situations, the key goal is to provide an example of a temporal interpolating filter so others can use it as a starting point. --Martink 16:27, 26 Jul 2006 (EDT)
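A minimal sketch of what such an interpolating filter might compute, assuming linear interpolation between the two bracketing timesteps and clamping outside the stored range. The shift and scale convention used here (aligned time = input time * scale + shift) is an assumption, as is the function name `interpolate_at`; per-point data is modeled as plain lists.

```python
from bisect import bisect_right


def interpolate_at(time_steps, values, t, shift=0.0, scale=1.0):
    """Linearly interpolate per-point values at aligned time t.

    time_steps: sorted input times; values: one list of scalars per step.
    Assumed convention: aligned_time = input_time * scale + shift.
    """
    source_t = (t - shift) / scale  # map the request back to input time
    if source_t <= time_steps[0]:
        return list(values[0])       # clamp before the first step
    if source_t >= time_steps[-1]:
        return list(values[-1])      # clamp after the last step
    hi = bisect_right(time_steps, source_t)
    lo = hi - 1
    weight = (source_t - time_steps[lo]) / (time_steps[hi] - time_steps[lo])
    return [(1.0 - weight) * a + weight * b
            for a, b in zip(values[lo], values[hi])]


times = [0.0, 1.0, 2.0]
data = [[0.0, 10.0], [2.0, 20.0], [4.0, 40.0]]
midway = interpolate_at(times, data, 0.5)
# The same input instant, expressed in a shifted and scaled time unit.
aligned = interpolate_at(times, data, 11.0, shift=10.0, scale=2.0)
```

A real filter would request only the two bracketing timesteps from its input rather than holding all of them, which is where the "retrieve input data as needed" part of the plan comes in.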

Add an ability to request a single cell. This will be a third form of update extent. This will be useful for getting one cell across many time steps. (could be nasty, lots of interactions with the pipeline and existing readers and extent types)

- This is where we are still undecided: how to handle requests for a few cells over many time steps. Clearly there is a working slow path, but what about interactive probe plots? We discussed using an information-only request that skips the main dataflow. We (royal we) also suggested creating a parallel pipeline for the plot. --Martink 16:27, 26 Jul 2006 (EDT)

Questions/Issues:

Will this be fast enough (especially for one cell)?

Is this practical within the time allotted?

Missing features?

Other issues?

Is the order reasonable?

- Not all these questions were answered, but the basic slow path approach was generally agreed to. The fast plot is the open issue. --Martink 16:27, 26 Jul 2006 (EDT)

Time support and infoviz

As we discuss time in the context of scientific visualization, it's useful to consider how time is supported in our infoviz work. It's natural to say that time will be an attribute of the data we get from a query - one attribute among many. However, there are several issues to consider. BTW - Please excuse any duplication of discussion that's gone before - I haven't been in on the Infoviz discussions.

Example

I'm interested in looking at data about purchases during a given time period. For example:

"Let me see all purchases of Super Size Cuddle Bear Diapers at Shopmart #456 between 01 July 2006 and 10 July 2006"

This would return a set of purchases, and accompanying credit card information. That credit card information could be mapped to people, and it might turn out that Brian McWylie had purchased Cuddle Bear Diapers on three separate occasions. Note that:

- The data contains entries that are 'snapshots' of the same person at different moments in time.

- Similarly, if several credit card numbers map to the same person, the data would also contain 'snapshots' of the same person, relative to credit card numbers.

In short, the difficulty is that an entity can be represented multiple times in any query - along any of the attributes that are returned with the query. Each of these cases is akin to the 'multiple timestep' problem. In scientific visualization, we're solving the multiple timestep problem by providing multiple data sets, each of which is logically separated by the dimension of 'time'. We know how to handle this, because explicitly encoded in the separation is the attribute (time) which separates them. There is no such general mechanism in infoviz, and there may be many dimensions along which the separation can occur.

Attribute-Based Separation of Data

So, Figure 1 is the basic conceptual diagram I keep coming back to. In the figure, we see several 'chunks' of data.

- 1 Is a typical Information Visualization setup, in which time is simply an attribute of the entities in the data. In this example, an entity's timestep can be 1,2 or 3.

- 2 Is a typical Scientific Visualization example, in which several datasets are grouped together, each of which represents a single moment in time (t=1,2 or 3). ParaView operates this way, with each filter considering a single moment in time. This assumption is built into such filters as isosurface filters - it's in fact part of the contract we have with the output of the filter - we expect the result to represent a single moment in time.

Consider that we could transform 1 to 2, by applying a filter that separates datasets into groups according to the value of time. ParaView will operate on data of type 2 because encoded in the 'setup' of the data is the knowledge that the attribute 'time' is what distinguishes the datasets.

A more generalized approach to these two kinds of collections is shown in 3, and 4. Data set 3 represents a typical Information visualization dataset. We want to separate them by arbitrary attribute a, which produces the datasets in 4. A specific example of this is separation by time, in which case we have the 'default' behavior of ParaView. ParaView 'encodes' the knowledge that the group of datasets are related by time, because that's the only dimension along which we operate - iterating, filter operation ... all these things assume iteration over time.

Thus, the time-encoded separation of undifferentiated data is really just a specific instance of a more general separation and iteration over data by attribute. Note that filters that assume separation by attribute a can now operate on the resulting group of data.
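
To make the 3 to 4 operation concrete, here is a minimal sketch in plain Python. It is not VTK or ParaView code; the function name separate_by_attribute and the sample records are made up for illustration. It only shows that separation by time is one instance of separation by an arbitrary attribute.

```python
from collections import defaultdict


def separate_by_attribute(records, attribute):
    """Split one undifferentiated collection (type 3) into groups
    keyed by the value of a single attribute (type 4)."""
    groups = defaultdict(list)
    for record in records:
        groups[record[attribute]].append(record)
    return dict(groups)


# Hypothetical query result: each record is a 'snapshot' of an entity.
purchases = [
    {"person": "A", "card": "1111", "time": 1},
    {"person": "A", "card": "2222", "time": 2},
    {"person": "B", "card": "3333", "time": 1},
    {"person": "A", "card": "1111", "time": 3},
]

# Separation by time gives the familiar one-dataset-per-timestep layout.
by_time = separate_by_attribute(purchases, "time")

# The same operation works along any other attribute.
by_person = separate_by_attribute(purchases, "person")
```

A filter that assumes separation by attribute a would then iterate over the values of the returned dictionary, the same way a time-aware filter iterates over timesteps.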

I don't claim to have a great example of why 3 to 4 is a good idea - but deep down inside it seems that conceptually it is a good thing to admit that if we're going to bring together information visualization and scientific visualization, there needs to be a consistent method of handling time, and I believe that a more general 'attribute separation' of data is worth considering. As we move to large infoviz datasets, we will certainly be in the same boat as we are with ParaView now - in which we separate data by some attribute (time) in order to be able to process it, and to be able to present it in a way that the user understands.

- The idea of attribute-based separation is certainly an intriguing concept. I'm not sure we need to spend too much time on that as one could easily implement a filter to perform the 3 to 4 operation. It's basically just a threshold operation. The only interesting part here is that this filter would have its threshold parameters set by information keys passed up from the pipeline executor.

- In my mind, there is only one sticky point. In order for this to make sense, there needs to be information keys to set not just time but any attribute, and the grouped data structure must handle not just time and parts, but also grouping on any attribute. Since we don't have a use case, it does not make much sense to me to implement the generalization at this moment if it complicates the interface (which it undoubtedly would).

- However, the whole concept gets me thinking (a dangerous notion). If we think about it, this mechanism makes sense for the ivars of a filter. For example, consider the isosurface filter. Consider the space of all possible outputs of the filter. This space has the three spatial dimensions, a dimension for each attribute, and a new dimension representing all possible isosurface values (actually, the current implementation would be the power set of all isosurface values). So, we can think about the isosurface filter as performing the 3 to 4 operation that groups outputs based on the isosurface value(s). So what if the mechanism for setting the isosurface values was the same as setting the time value? That might have interesting ramifications on animation controls.

- OK. So conceptually that makes sense, but practically it's nonsense. First of all, to actually implement this would require changing all filters to grab all their ivar values from keys the executor passes them. The task is so large as to be impractical. Also, what happens when two filters have an ivar of the same name? For example, what if there were two isosurface filters each operating on a different field? There would have to be a mechanism to select the filter, but then how would that be any different from just setting the ivar directly on the filter itself?

- So coming back full circle, maybe we already have an implementation for the 3 to 4 operation. It's the threshold filter. Granted, it would be nicer if we could select multiple ranges, but I believe Dave R. has already been working on something like that for Prism.

- --Ken 08:09, 28 Jul 2006 (EDT)
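
As a rough illustration of the point above, the following plain Python sketch treats the 3 to 4 operation as repeated thresholding and also shows a threshold that accepts multiple ranges. It does not use the VTK threshold filter; the functions threshold and separate_with_threshold and the sample data are assumptions made up for this example.

```python
def threshold(records, attribute, ranges):
    """Keep records whose attribute value falls inside any closed
    [lo, hi] range in `ranges`."""
    return [
        r for r in records
        if any(lo <= r[attribute] <= hi for lo, hi in ranges)
    ]


def separate_with_threshold(records, attribute, values):
    """Build the 3 to 4 grouping by running one degenerate
    threshold [v, v] per requested attribute value."""
    return {v: threshold(records, attribute, [(v, v)]) for v in values}


# Hypothetical undifferentiated data with time as a plain attribute.
cells = [
    {"id": 0, "time": 1, "pressure": 0.2},
    {"id": 1, "time": 2, "pressure": 0.9},
    {"id": 2, "time": 2, "pressure": 0.5},
    {"id": 3, "time": 3, "pressure": 0.7},
]

# One group per time value, as in data of type 4.
groups = separate_with_threshold(cells, "time", [1, 2, 3])

# A single threshold pass selecting two disjoint ranges.
multi = threshold(cells, "pressure", [(0.0, 0.3), (0.65, 1.0)])
```

In a real pipeline the values passed to the threshold would presumably come from the information keys mentioned above rather than from a literal list.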

Sci. Viz. Without Regard to Time

We're already thinking about InfoViz as 'disregarding time' (considering it just one of many attributes of data). If we extend this thinking, there are many possibilities in a time-independent SciViz world. For example:

- At a particular moment in time, we'd like to highlight all cells which, at some point in the future, will become degenerate. Or, which at some point in the simulation have TEMPERATURE within a certain range. This would aid in understanding the development of the simulation.

- For a simulation of an airplane landing, I'd like to see the turbulent flow over the whole aircraft for the entire length of the simulation. This would give me the entire simulation in one view, showing a 'path through time'.

- Taking an isosurface of a value over all timesteps would show me an envelope of that value.

- There are many examples of things you could see from seeing a Muybridge-like multiple exposure picture of things over time. The best examples of this would perhaps be things that move, like a bouncing ball.

- Material tracking. Given a piece of material that ended up at a certain place, what was its evolution over time?
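
The first item in the list above (highlighting cells that have TEMPERATURE within a range at some point in the simulation) can be sketched in plain Python as a query across all timesteps. This is only an illustration under stated assumptions: the per-timestep lists, the function names, and the numbers are made up, and the mesh is assumed to keep the same cell ids at every timestep. The second function computes a per-cell value range over time, which is a much simpler stand-in than a true isosurface envelope.

```python
def cells_ever_in_range(timesteps, lo, hi):
    """Return the sorted ids of cells whose value lies in [lo, hi]
    at any timestep. `timesteps` is a list of per-cell value lists."""
    hits = set()
    for values in timesteps:
        for cell_id, value in enumerate(values):
            if lo <= value <= hi:
                hits.add(cell_id)
    return sorted(hits)


def value_range_over_time(timesteps):
    """Return the (min, max) of each cell's value over all timesteps."""
    return [(min(column), max(column)) for column in zip(*timesteps)]


# Hypothetical TEMPERATURE values: three timesteps, three cells each.
temperature = [
    [300.0, 310.0, 295.0],  # t = 1
    [305.0, 450.0, 296.0],  # t = 2
    [500.0, 320.0, 297.0],  # t = 3
]

# Cells that are in [400, 600] at some point in the run.
hot = cells_ever_in_range(temperature, 400.0, 600.0)

# Per-cell bounds over the whole run.
bounds = value_range_over_time(temperature)
```

Both queries need every timestep available at once, which is exactly the case where time behaves like just another attribute of the data.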

Of course, these techniques will only be useful with certain types of data, and on certain runs of data. Still, it is interesting to think about the functional overlap between SciViz and InfoViz - when we are able to combine arbitrary query-based questions with discrete representations of data, what things will we be able to study that we cannot now?